AMD announced today that users can run GPT-based large language models (LLMs) locally to build their own AI chatbots.

According to AMD, LLMs and AI chatbots can run locally on Ryzen 7000 and Ryzen 8000 series APUs equipped with the new XDNA NPU, as well as on Radeon RX 7000 series GPUs, which include built-in AI acceleration cores.

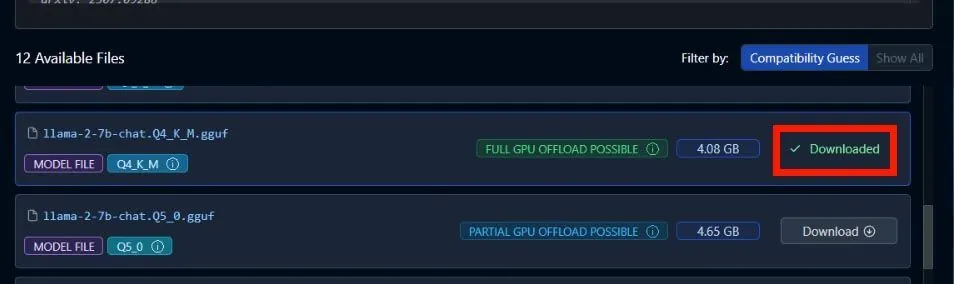

The announcement walks through the setup step by step. For example, to run the 7-billion-parameter Mistral model, search for and download “TheBloke/OpenHermes-2.5-Mistral-7B-GGUF”; to run the 7-billion-parameter Llama 2 model, search for and download “TheBloke/Llama-2-7B-Chat-GGUF”.
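The search-and-download step can be sketched in Python. This is a minimal illustration, not AMD's procedure. It assumes the two repositories named above are hosted on Hugging Face and fetched through its `resolve/main` download URL pattern. The quantized filenames (`Q4_K_M` variants) and the helper names `download_url` and `gguf_version` are hypothetical choices. The header check relies on the GGUF format starting with the 4-byte magic `GGUF` followed by a little-endian `uint32` version number.

```python
import struct
from pathlib import Path
from typing import Optional

# Repository IDs named in AMD's instructions (assumed to be hosted on Hugging Face).
MODELS = {
    "mistral-7b": "TheBloke/OpenHermes-2.5-Mistral-7B-GGUF",
    "llama2-7b": "TheBloke/Llama-2-7B-Chat-GGUF",
}

# Hypothetical default files: each repo ships several quantization variants,
# and the Q4_K_M choice here is an assumption, not part of AMD's guide.
DEFAULT_FILES = {
    "mistral-7b": "openhermes-2.5-mistral-7b.Q4_K_M.gguf",
    "llama2-7b": "llama-2-7b-chat.Q4_K_M.gguf",
}


def download_url(model: str, filename: Optional[str] = None) -> str:
    """Build a direct Hugging Face download URL for a GGUF file in the chosen repo."""
    repo = MODELS[model]
    name = filename or DEFAULT_FILES[model]
    return f"https://huggingface.co/{repo}/resolve/main/{name}"


def gguf_version(path: Path) -> int:
    """Return the GGUF format version of a downloaded file, or raise if it is not GGUF."""
    with open(path, "rb") as f:
        header = f.read(8)
    # A GGUF file begins with the ASCII magic b"GGUF".
    if len(header) < 8 or header[:4] != b"GGUF":
        raise ValueError(f"{path} is not a GGUF file")
    # The format version is a little-endian uint32 right after the magic.
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

For example, `download_url("llama2-7b")` returns `https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGUF/resolve/main/llama-2-7b-chat.Q4_K_M.gguf`. Checking the header after a download catches truncated or mislabeled files before a chatbot front end tries to load them.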

AMD is not the first company to offer this. NVIDIA recently launched “Chat with RTX”, an AI chatbot that runs on GeForce RTX 40 and RTX 30 series GPUs. It uses TensorRT-LLM for acceleration and quickly generates responses based on local datasets.