At the GTC Developer Conference, NVIDIA launched the DGX SuperPOD, which is designed to handle trillion-parameter AI models and to deliver consistent performance for ultra-large-scale generative AI training and inference workloads.

The new DGX SuperPOD features a high-efficiency, liquid-cooled rack-scale architecture built on NVIDIA DGX GB200 systems. It provides 11.5 exaflops of AI computing power at FP4 precision and 240 terabytes of fast memory, and customers can scale further by adding racks.

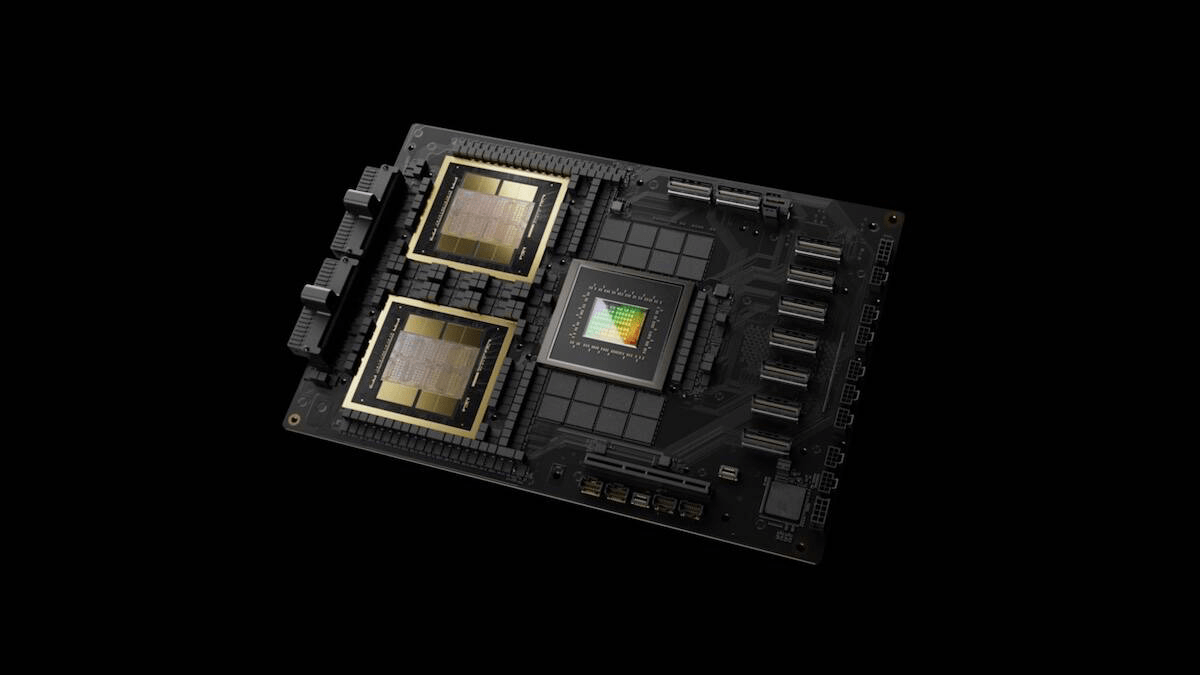

Each DGX GB200 system has 36 NVIDIA GB200 Superchips, which together comprise 36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell GPUs, connected into a single supercomputer through fifth-generation NVIDIA NVLink.

Compared with the NVIDIA H100 Tensor Core GPU, the GB200 Superchip delivers up to 30 times the performance on large language model inference workloads.

A DGX SuperPOD contains 8 or more DGX GB200 systems connected through NVIDIA Quantum InfiniBand, and it can scale to tens of thousands of GB200 Superchips.

The default configuration that NVIDIA currently offers customers links the 576 Blackwell GPUs in 8 DGX GB200 systems through NVLink.
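The figures quoted above are consistent with one another, and a short calculation makes the relationship explicit. The Python sketch below is only a sanity check on the article's numbers. It assumes that the 11.5 exaflops and 240 terabytes refer to the 8-system, 576-GPU configuration, which the article does not state outright, and the function name `superpod_totals` is a hypothetical helper introduced for illustration.

```python
# Figures quoted in the article.
SUPERCHIPS_PER_SYSTEM = 36   # GB200 units per DGX GB200 system
CPUS_PER_SYSTEM = 36         # Grace CPUs per system
GPUS_PER_SYSTEM = 72         # Blackwell GPUs per system
DEFAULT_SYSTEMS = 8          # systems in the default configuration
TOTAL_EXAFLOPS = 11.5        # quoted AI compute
TOTAL_MEMORY_TB = 240        # quoted memory


def superpod_totals(systems: int) -> dict:
    """Hypothetical helper: component counts for a given number of systems."""
    return {
        "superchips": systems * SUPERCHIPS_PER_SYSTEM,
        "cpus": systems * CPUS_PER_SYSTEM,
        "gpus": systems * GPUS_PER_SYSTEM,
    }


totals = superpod_totals(DEFAULT_SYSTEMS)

# Each GB200 unit pairs one Grace CPU with two Blackwell GPUs.
gpus_per_superchip = GPUS_PER_SYSTEM // SUPERCHIPS_PER_SYSTEM

# Assumption: the quoted totals describe the 8-system configuration.
petaflops_per_gpu = TOTAL_EXAFLOPS * 1000 / totals["gpus"]
memory_tb_per_system = TOTAL_MEMORY_TB / DEFAULT_SYSTEMS

print(totals)
print(f"GPUs per GB200 unit: {gpus_per_superchip}")
print(f"Approx. petaflops per GPU: {petaflops_per_gpu:.2f}")
print(f"Memory per system (TB): {memory_tb_per_system:.0f}")
```

Under that assumption, the 576-GPU count follows directly from 8 systems of 72 GPUs each, and the quoted totals work out to roughly 20 petaflops per GPU and 30 terabytes of memory per system.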

Jensen Huang, founder and CEO of NVIDIA, said:

"The NVIDIA DGX artificial intelligence supercomputer is the factory of the artificial intelligence industrial revolution. The new DGX SuperPOD combines the latest advances in NVIDIA accelerated computing, networking and software to enable every company, industry and country to refine and generate their own artificial intelligence."